Recently, @pinwizj and @PinballProfile posted the number of calendar event submissions for January 2018 on Facebook and Pinside…

The numbers show more events submitted for January 2018 than were completed in January 2017, suggesting that the number of IFPA events grew despite the new endorsement fee. However, commenters quickly pointed out several problems with this comparison. First, it ignores the year-over-year growth trajectory. The number of events has been increasing for years, so an increase from 2017 to 2018 is not surprising; the more interesting question is whether the growth from 2017 to 2018 is bigger or smaller than expected. Second, it ignores the fact that the State Championship Series (SCS) finals take place in January this year, whereas they were held in February in previous years. As a result, 2018's January numbers will be somewhat inflated.

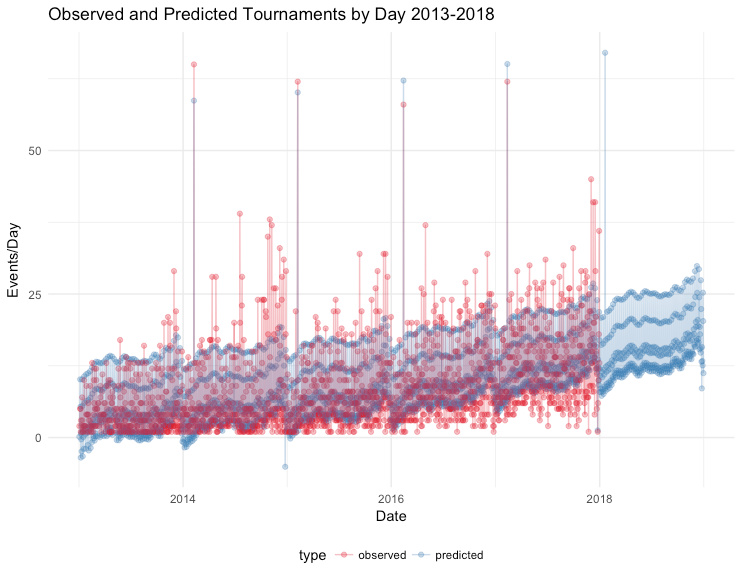

To address these issues, I applied a forecast model to the IFPA tournament data. The model accounts for several effects common in time series data, such as annual growth, seasonal effects, and weekly effects. Importantly, it can also account for special days (Christmas, New Year's Day, etc.) that uniquely influence the data. In this case, I directed the model to account for SCS finals day, historically the biggest tournament day of the year in terms of number of events. Below you can see the model's predictions of the number of events held each day from 2013 to 2018.
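The post doesn't spell out the model's equations. As an illustrative assumption, the effects just described match an additive decomposition of the kind used by forecasting tools such as Facebook's Prophet, where the daily event count $y(t)$ is the sum of a growth term, yearly and weekly seasonal terms, and a special-day term:

$$
y(t) = g(t) + s_{\text{year}}(t) + s_{\text{week}}(t) + h(t) + \varepsilon_t
$$

$$
s_{\text{year}}(t) = \sum_{n=1}^{N}\left[a_n \cos\!\left(\frac{2\pi n t}{365.25}\right) + b_n \sin\!\left(\frac{2\pi n t}{365.25}\right)\right],
\qquad
h(t) = \kappa \cdot \mathbf{1}\left[t \in \text{SCS finals days}\right]
$$

Here $g(t)$ is the trend (annual growth), $s_{\text{week}}(t)$ adds a separate offset for each day of the week, $\kappa$ is the extra number of events expected on SCS finals day, and $\varepsilon_t$ is noise; $N$, $a_n$, $b_n$, and $\kappa$ would be fitted from the data. Because the terms simply add, nothing in this form prevents a prediction from dipping below zero.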

There are several interesting effects that the model picked up on.

- The steady increase over time indicates annual growth in the number of tournaments from year to year.

- The “wave” pattern within each year indicates seasonality: there is a rapid dip at the beginning of each year, a recovery around spring, another slump during summer, and then a rapid increase in the number of events at the end of the year. Many of these fluctuations can be attributed to the SCS: the end-of-year run reflects last-chance qualifying events, and the dip at the beginning of the year is a return to the norm.

- The multiple “bands” that appear are the result of “weekly effects”: far more tournaments are held on Saturday and Sunday than on other days of the week. (Interestingly, Tuesday is the most common day for weekday events.)

- The model sometimes does dumb things, like predicting a negative number of tournaments.

- Finally, on one special day every year, there is a huge spike in tournaments. This is SCS finals day.
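To make the components in the list above concrete, each one can be written as a column of a design matrix and fitted by ordinary least squares. This is a minimal, dependency-free sketch under stated assumptions, not the model behind the plots: the linear trend, the two yearly Fourier pairs, Monday as the weekday baseline, and the function names (`features`, `fit`, `predict`, `daily_range`) are all hypothetical choices. `predict` clips at zero as one simple guard against negative-tournament predictions.

```python
import math
from datetime import date, timedelta

# All modeling choices below are illustrative assumptions, not the post's actual model.
YEAR_DAYS = 365.25
N_FOURIER = 2  # hypothetical: number of yearly sine/cosine pairs


def daily_range(start, n_days):
    """Return n_days consecutive dates beginning at start."""
    return [start + timedelta(days=i) for i in range(n_days)]


def features(day, start, special_days):
    """One design-matrix row: intercept, trend, yearly, weekly, special-day terms."""
    t = (day - start).days
    row = [1.0, t / YEAR_DAYS]  # intercept + linear annual growth (per year)
    for n in range(1, N_FOURIER + 1):  # yearly seasonality (the "wave" pattern)
        angle = 2 * math.pi * n * t / YEAR_DAYS
        row += [math.cos(angle), math.sin(angle)]
    # Weekly effect (the "bands"): dummies for Tue..Sun, Monday is the baseline
    row += [1.0 if day.weekday() == d else 0.0 for d in range(1, 7)]
    # Special-day effect (e.g. SCS finals day)
    row.append(1.0 if day in special_days else 0.0)
    return row


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit(days, counts, start, special_days):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    X = [features(d, start, special_days) for d in days]
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(X, counts)) for i in range(k)]
    return solve(xtx, xty)


def predict(beta, day, start, special_days):
    """Sum the fitted components; clip at zero since event counts can't be negative."""
    raw = sum(b * x for b, x in zip(beta, features(day, start, special_days)))
    return max(0.0, raw)
```

With real data, `days` would come from `daily_range(date(2013, 1, 1), n)`, `counts` would hold the IFPA event totals per day, and `special_days` would be the set of SCS finals dates; the last fitted coefficient then estimates the SCS-day spike. Clipping is the bluntest fix for negative predictions; a model that works on a log scale or enforces a floor would avoid them more gracefully.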

While the actual data is rather noisy, the forecast plot smooths this noise out. It can't predict random swings and fluctuations in the data, but it gives a nice estimate that is “in the ballpark”.

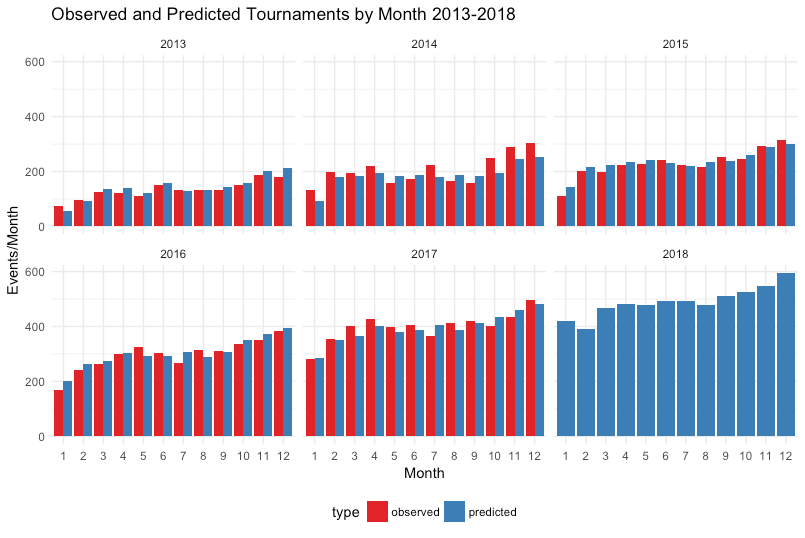

In the figure below, we break down the numbers by month.

When expressed as monthly sums, the forecast model does an excellent job of predicting the number of tournaments held each month.
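Turning daily predictions into monthly totals is a simple group-and-sum. A minimal sketch, assuming the daily forecast is available as a mapping from `datetime.date` to predicted event count; the function name `monthly_totals` and the example numbers are hypothetical, not IFPA data:

```python
from collections import defaultdict
from datetime import date


def monthly_totals(daily_forecast):
    """Sum a {date: predicted event count} mapping into {(year, month): total}."""
    totals = defaultdict(float)
    for day, value in daily_forecast.items():
        totals[(day.year, day.month)] += value
    # Return months in chronological order
    return dict(sorted(totals.items()))


# Example input (hypothetical numbers, not IFPA data)
example = {date(2018, 1, 6): 30.0, date(2018, 1, 7): 25.5, date(2018, 2, 3): 28.0}
print(monthly_totals(example))  # {(2018, 1): 55.5, (2018, 2): 28.0}
```

Summing over a month also averages out much of the day-to-day noise, which is one reason monthly totals can track the actual numbers more closely than the daily plot does.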

The really interesting/relevant stuff is in the 2018 predictions:

- The model predicted about 421 tournaments in January 2018. So, after accounting for year-over-year growth and the SCS effect, the actual number of events submitted to the IFPA calendar (373) falls a bit shy of the predicted number. Keep in mind that the model knows nothing about the WPPR fee; it simply assumes business as usual.

- The model predicts a slump in February 2018, likely because there is no SCS finals that month.

- It is likely that we will crack 500 events in a single month this year. (!!!)
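For a sense of scale, the January gap between the forecast and calendar submissions, using the two figures above, works out as:

$$
421 - 373 = 48 \text{ events}, \qquad \frac{48}{421} \approx 0.114, \qquad \frac{373}{421} \approx 0.886
$$

That is, submissions ran at roughly 89% of the forecast, about 11% below it. Since this compares against a single point prediction, how meaningful an 11% gap is depends on the width of the model's uncertainty interval for that month.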

Overall, the analysis addresses some of the issues raised earlier. When year-over-year growth and the SCS finals effect are accounted for, the growth observed so far in January 2018 falls short of what would be expected. However, if this is the result of a negative WPPR fee effect, the effect appears modest at best. Of course, the 373 events are only on the calendar: if many of them are cancelled or their directors fail to submit results, the IFPA will fall well short of the forecast. At the end of the day, what matters most is that we are continuing to see positive growth.

I’ll update this post in a couple months to see how well these predictions play out!